Aug. 21, 2018 | InBrief

Best practices for AR app development with the RealWear HMT-1

Technology, and how we interact with it, is evolving every day. Someday, virtual overlays like those in Minority Report and Terminator might not be science fiction. Augmented Reality (AR) and Virtual Reality (VR), two relatively new interactive experiences, have risen and fallen in popularity as new technology became widely available, but not enough to sustain user engagement in the consumer or commercial space. AR and VR are usually showcased in consumer products, such as video games, but they can provide value in business as well.

AR vs. VR

Before going further, let us explore the fundamental differences between AR and VR. AR enhances the user’s immediate real-world environment by overlaying additional information about that environment and the physical objects within it. Users can still interact with their surroundings, but with virtual objects or overlays added.

On the other hand, VR replaces the user’s real-world environment with a “virtual” one. This immerses the user fully into a three-dimensional, simulated environment where they can manipulate virtual objects and perform actions simulating real-life, physical scenarios.

As more powerful technology has shrunk, wearable devices such as headsets have become a popular way to enhance everyday life. Google started the next iteration of AR technology in 2014 when it launched Google Glass, bringing AR to the everyday consumer. Lacking adoption, Glass languished until its cancellation in 2015. Despite this failure, Google still saw huge potential in AR and VR, and in 2017 it launched Google Glass Enterprise Edition, which focuses on use cases in manufacturing, healthcare, and logistics. Other manufacturers, including Epson and RealWear, also saw the potential of AR and VR in business and launched their own headsets.

The RealWear HMT-1

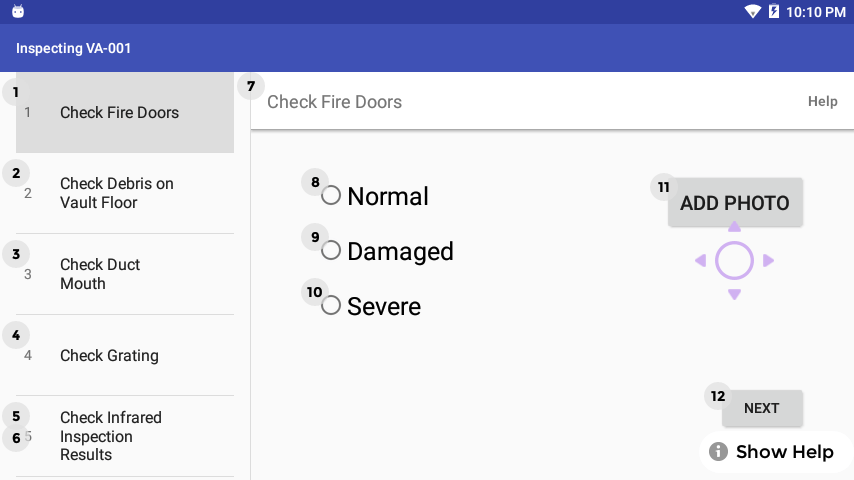

West Monroe acquired a RealWear HMT-1 to focus on using AR in the utility industry. The HMT-1 is a wireless headset with an adjustable screen that appears to the wearer as a 7-inch tablet. Workers currently log or retrieve information on phones or tablets; the HMT-1, by contrast, is fully voice controlled, and it is faster, longer-lasting, and more durable than glass-style displays such as Google Glass or Epson’s Moverio. Developing for the HMT-1 is the same as developing for an Android tablet: developers can use native Java, Cordova, HTML, React Native, Unity, or Xamarin. Additionally, any existing Android app that supports API level 23 can run on the HMT-1 with default voice functionality, which is usable but might need tweaks to get the desired behavior.

Whether you are just starting development for a RealWear HMT-1 or porting an existing application so it works better on the HMT-1, here are some best practices to make your application turn out as expected, reduce development time, and create a user-friendly experience. Refer to RealWear’s WearML and WearHF directive documentation for RealWear-specific topics.

Build for tablet first, then voice

Think of adding WearHF directives as a nice-to-have. Voice commands already work out of the box with apps not built with the HMT-1 in mind, but some functionality you want might be missing, or there might be overlays you want to add or remove.

When converting an existing app, run it on an HMT-1 and identify any problem areas. Fixing them can mean adding more commands for the same action, removing or changing default overlays, adding clickable areas, or changing scrolling behavior. Then apply those changes per layout file. Views added programmatically can have their content descriptions edited, so add any needed WearHF directives when you create and attach those views.
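
Because the directives for programmatic views go into their content descriptions, it can help to build those strings in one place. The sketch below is a hypothetical helper (`WearHfDescriptions` is not part of any RealWear SDK). It assumes that multiple directives are separated by `|` and uses `hf_no_number`, a directive for hiding the numbered overlay, as an example; confirm both against RealWear’s WearHF documentation.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical helper for composing WearHF directive strings.
// ASSUMPTION: directives are joined with '|' (verify in RealWear's docs).
final class WearHfDescriptions {
    static final String SEPARATOR = "|";

    private WearHfDescriptions() {}

    /** Joins directives in order, dropping duplicates and rejecting anything without the hf_ prefix. */
    static String of(String... directives) {
        Set<String> unique = new LinkedHashSet<>(); // keeps first-seen order
        for (String d : directives) {
            String trimmed = d.trim();
            if (!trimmed.startsWith("hf_")) {
                throw new IllegalArgumentException("Not a WearHF directive: " + d);
            }
            unique.add(trimmed);
        }
        return String.join(SEPARATOR, unique);
    }
}
```

On the device, the result would be passed to Android’s `View.setContentDescription(CharSequence)` when the view is created, for example `button.setContentDescription(WearHfDescriptions.of("hf_no_number"));`. Rejecting strings without the `hf_` prefix is a choice made in this sketch to catch typos early; it would also reject ordinary accessibility text, so drop that check if your views need both.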

For new apps, add WearHF directives as you create layouts or programmatic views. They do not have to be comprehensive, but adding directives that match your intended functionality, such as clickable, overlay, and scroll directives, will reduce the changes needed later.

Build for accessibility

By building for tablet first, you’re already on a path to achieving this. Buttons and text are usually larger on tablets, but you do not need to worry about button placement for ease of reach, or about padding clickable text for a bigger hit area, because the HMT-1 is fully voice controlled. Users will mostly use your app for information, so make sure any text is large and navigation is simple and quick.

By default, every currently attached clickable control gets a numbered circle next to it that fades away. Depending on how much clickable content is on screen, you might want to add directives that remove overlays from views that are obviously clickable, such as buttons with text or items in a list. A screen full of overlays can be confusing and gets in the way of quickly finding information.

Build for a field device

The HMT-1 is like any other Android tablet; the way you interact with it may be different, but most functionality is the same. A major difference is where the HMT-1 is used. It is a field device, so battery life is crucial, and it connects only over Wi-Fi. Build apps to maximize battery life and offline functionality.

- Be conservative with any background tasks and services. Determine the user’s location only when needed, and cancel the request immediately afterward. Replace polling or background data synchronization with manual reloads to maximize how long the device stays usable.

- Design the app offline first, so that caching and the handling of lost or weak connections are planned from the start and the app keeps working without a good connection.
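
A minimal sketch of the manual-reload, offline-first pattern from the list above, written in plain Java so it runs off the device. `RemoteSource` and `ManualRefreshRepository` are hypothetical names; in a real app, `fetch()` would wrap the app’s own network call and the seed value would come from local storage.

```java
import java.util.Optional;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical data source; fetch() throws when Wi-Fi is lost or too weak.
interface RemoteSource<T> {
    T fetch() throws Exception;
}

/** Serves cached data and refreshes it only on explicit request; never polls. */
final class ManualRefreshRepository<T> {
    private final RemoteSource<T> remote;
    private final AtomicReference<T> cached = new AtomicReference<>();

    ManualRefreshRepository(RemoteSource<T> remote, T seed) {
        this.remote = remote;
        this.cached.set(seed); // e.g. data loaded from local storage at startup (may be null)
    }

    /** Returns the cached copy without touching the network. */
    Optional<T> current() {
        return Optional.ofNullable(cached.get());
    }

    /** Called from a user action; returns true only if fresh data replaced the cache. */
    boolean refresh() {
        try {
            T fresh = remote.fetch();
            if (fresh == null) {
                return false; // nothing usable came back; keep the old copy
            }
            cached.set(fresh);
            return true;
        } catch (Exception e) {
            return false; // connection failed; keep serving the cached copy
        }
    }
}
```

The repository never schedules work on its own: `refresh()` runs only when the user asks (for example, from a “Reload” voice command), and a failed refresh leaves the cached copy in place so the screen keeps showing the last good data.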

We also learned some painful lessons while developing these practices. Hopefully, they can help you solve similar problems you encounter along the way.

Do not depend on Google Play services

Access to Google Play services would be convenient: it brings the Play Store and integration with location, Maps, Drive, and any apps that rely on Play services. However, the HMT-1 does not come with Google Play services. Manually installing Google Play services and the related services the Play Store needs is possible, but when we did, voice commands stopped working. Uninstalling these services restored default functionality.

Do not configure views in onCreateView

While developing a native app with support library Fragments, we saw strange WearHF behavior when setting up views in onCreateView: voice commands were recognized but no action was taken, overlays persisted, and the application stopped responding. Moving all of this setup to onViewCreated fixed these issues.

Adding more command utterances removes expected behavior

When you add the “hf_commands:” directive, most, if not all, other directives are ignored, and the overlay is hidden. Directives for a persistent overlay, changed overlay text, dictation, and so on no longer take effect. To work around this, we made the single command phrase as generic as possible, so that it would be contained in most of the ways a person might try to activate it. For example, making the command “Search” means phrases like “Start search” and “Search items” still trigger the action.
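
When choosing that single generic phrase, it can help to list the ways users might actually say the command and check which of them contain it. The helper below is a hypothetical design-time check, not part of WearHF. It assumes, as our experience suggested, that an utterance containing the command phrase still triggers it, and it matches whole words only; WearHF’s actual matching may differ, so test candidates on the device.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.regex.Pattern;

/** Hypothetical design-time check: which phrasings do NOT contain the command as a whole word? */
final class CommandCoverage {
    private CommandCoverage() {}

    static List<String> uncovered(String command, List<String> phrasings) {
        // \b anchors the quoted command to word boundaries, so "search" does not match "researching".
        Pattern word = Pattern.compile("\\b" + Pattern.quote(command.toLowerCase(Locale.ROOT)) + "\\b");
        List<String> missed = new ArrayList<>();
        for (String phrasing : phrasings) {
            if (!word.matcher(phrasing.toLowerCase(Locale.ROOT)).find()) {
                missed.add(phrasing);
            }
        }
        return missed;
    }
}
```

For example, `CommandCoverage.uncovered("Search", List.of("Start search", "Search items", "Find items"))` returns `[Find items]`, flagging a phrasing that would need a different command word or user training.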

Broadcasting results replaces default behavior with custom handling

When you add the “hf_broadcast_results” directive, all voice commands are broadcast, so registering a receiver with the correct intent filter will capture every voice command. This matters because adding the directive to even one view makes all voice commands broadcast while that view is displayed, so you must handle every command manually, including ones that would normally just click a button.

Broadcasting is useful for commands without a direct on-screen target, such as an app-wide command to return to the main screen, but its usefulness is limited by all the manual handling it requires. To get an app-wide command in native development, we instead put the voice command on a view in the Activity’s layout, wired to an onClick handler, so it is present whichever Fragment is displayed and the command is always active.
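
Once “hf_broadcast_results” is in effect, every command has to be routed by hand. A minimal sketch of that routing, assuming a `BroadcastReceiver` registered for WearHF’s result intent has already extracted the spoken command as a string (the intent action and extra name are not shown here; take them from RealWear’s documentation). `VoiceCommandDispatcher` is a hypothetical class.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical router for broadcast voice commands, since default click handling no longer runs. */
final class VoiceCommandDispatcher {
    private final Map<String, Runnable> handlers = new HashMap<>();

    /** Registers a handler; returns this so registrations can be chained. */
    VoiceCommandDispatcher on(String command, Runnable handler) {
        handlers.put(normalize(command), handler);
        return this;
    }

    /** Runs the matching handler; returns false when no handler is registered. */
    boolean dispatch(String spokenCommand) {
        Runnable handler = handlers.get(normalize(spokenCommand));
        if (handler == null) {
            return false;
        }
        handler.run();
        return true;
    }

    private static String normalize(String command) {
        return command.trim().toLowerCase(Locale.ROOT);
    }
}
```

Commands are trimmed and lowercased so that “Save” and “save” reach the same handler, and `dispatch` returns `false` for unrecognized commands rather than failing, leaving the receiver free to log or ignore them.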

We established these practices and lessons learned while developing apps to handle inspections of utility structures, like maintenance holes, vaults, and transformers, and information lookup on nearby power lines.

When creating your own app for the HMT-1, remember this information to avoid the same development pitfalls and create an ideal user experience.
